In this article we build a Hive2 server on a Hadoop 2.7 cluster. We have a dedicated node (HDAppsNode-1) for Hive (and other apps) within the cluster, which is highlighted in the deployment diagram below showing our cluster model in Azure. To have a production-grade system, we keep the Hive Meta Store in a separate MySQL instance running on a separate host (HDMetaNode-1) rather than in the default embedded database.

This article assumes you have already configured Hadoop 2.0+ on your cluster. The steps we followed to create the cluster can be found here; they build a Single Node Cluster. We cloned the single node to multiple nodes (7 nodes, as seen below) and then updated the Hadoop configuration files to transform it into a multi-node cluster. This blog helped us do the same. The updated Hadoop configuration files for the model below (Multi-Node-Cluster) have been shared here for your reference.

Let's get started.

1. Create the Hive2 Meta Store in MySQL running on HDMetaNode-1.

sudo apt-get install mysql-server

<Log in to MySQL using the default user: root>

CREATE DATABASE hivemetastore;

USE hivemetastore;

CREATE USER 'hive'@'%' IDENTIFIED BY 'hive';

GRANT all on *.* to 'hive'@'HDAppsNode-1' identified by 'hive';

2. Get Hive2.

We keep the Hive binaries under /media/SYSTEM/hadoop/hive/apache-hive-2.0.0.

cd /media/SYSTEM/hadoop/hive

wget http://mirror.cc.columbia.edu/pub/software/apache/hive/stable-2/apache-hive-2.0.0-bin.tar.gz

tar -xvf apache-hive-2.0.0-bin.tar.gz

mv apache-hive-2.0.0-bin apache-hive-2.0.0

cd apache-hive-2.0.0

mv conf/hive-default.xml.template conf/hive-site.xml

Edit hive-site.xml to configure the MySQL Meta Store and the Hadoop-related settings. Please change the values to suit your environment; /media/SYSTEM/hadoop/tmp is our Hadoop TMP directory on the local filesystem.

Apart from that, we had to replace every occurrence of the following to make Hive2 work with our cluster:

${system:java.io.tmpdir}/ with /media/SYSTEM/hadoop/tmp/hive/

/${system:user.name} with /
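The two substitutions above can be scripted rather than done by hand. The following is a minimal sketch, assuming a POSIX shell and `sed`; the function name `fix_hive_site` is hypothetical. It applies both rules literally and in order, and keeps a `.bak` copy of the original file. Because the second rule also matches the path produced by the first, affected values end in `/media/SYSTEM/hadoop/tmp/hive/` with no per-user suffix; drop the second expression if you would rather keep `${system:user.name}` in the path.

```shell
# Sketch: apply the two replacements to a hive-site.xml file.
# fix_hive_site is a hypothetical helper name, not part of Hive.
fix_hive_site() {
    file="$1"
    # -i.bak edits in place and keeps the original as <file>.bak.
    # \$ and \. match a literal dollar sign and dot; | is the delimiter
    # so the slashes in the paths need no escaping.
    sed -i.bak \
        -e 's|\${system:java\.io\.tmpdir}/|/media/SYSTEM/hadoop/tmp/hive/|g' \
        -e 's|/\${system:user\.name}|/|g' \
        "$file"
}

# Usage: fix_hive_site conf/hive-site.xml
```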

<configuration>

<property>

<name>hive.exec.local.scratchdir</name>

<value>/media/SYSTEM/hadoop/tmp/hive/${system:user.name}</value>

<description>Local scratch space for Hive jobs</description>

</property>

<property>

<name>hive.downloaded.resources.dir</name>

<value>/media/SYSTEM/hadoop/tmp/hive/${hive.session.id}_resources</value>

<description>Temporary local directory for added resources in the remote file system.</description>

</property>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://HDMetaNode-1/hivemetastore?createDatabaseIfNotExist=true</value>

<description>metadata is stored in a MySQL server</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>MySQL JDBC driver class</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

<description>user name for connecting to mysql server</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>hive</value>

<description>password for connecting to mysql server</description>

</property>

</configuration>
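Since hive-site.xml is large, a quick check that the four metastore connection properties are still in the file can catch an accidental deletion. This is a minimal sketch, assuming a POSIX shell and `grep`; `check_hive_site` is a hypothetical helper name, and it only checks that each property name is present, not that its value is right.

```shell
# Sketch: confirm that the metastore connection properties are present.
# check_hive_site is a hypothetical helper name, not part of Hive.
check_hive_site() {
    file="$1"
    missing=0
    for name in \
        javax.jdo.option.ConnectionURL \
        javax.jdo.option.ConnectionDriverName \
        javax.jdo.option.ConnectionUserName \
        javax.jdo.option.ConnectionPassword
    do
        # -F treats the property name as a fixed string, not a regex
        if ! grep -qF "<name>$name</name>" "$file"; then
            echo "missing property: $name"
            missing=1
        fi
    done
    # Exit status 0 when all four names were found, 1 otherwise
    return "$missing"
}

# Usage: check_hive_site conf/hive-site.xml
```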

3. Update Hadoop Config

core-site.xml (add the properties below)

<property>

<name>hadoop.proxyuser.hive.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hive.groups</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hduser.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hduser.groups</name>

<value>*</value>

</property>
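The proxy-user settings are read by the NameNode and ResourceManager, so they take effect only after those daemons restart or reload them. The sketch below, assuming the `hdfs` and `yarn` commands are on the PATH of an admin user, reloads the settings without a restart; `refresh_proxyusers` is a hypothetical helper name.

```shell
# Sketch: reload the hadoop.proxyuser.* settings on a running cluster.
# refresh_proxyusers is a hypothetical helper name.
refresh_proxyusers() {
    if ! command -v hdfs >/dev/null 2>&1 || ! command -v yarn >/dev/null 2>&1; then
        echo "hdfs or yarn not found on PATH" >&2
        return 1
    fi
    # Reload proxy-user mappings on the NameNode, then the ResourceManager
    hdfs dfsadmin -refreshSuperUserGroupsConfiguration &&
        yarn rmadmin -refreshSuperUserGroupsConfiguration
}

# Usage: refresh_proxyusers
```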

4. Set up Hive Server

Update ~/.bashrc and ~/.profile to include the Hive2 path.

#HIVE VARIABLES START

HIVE_HOME=/media/SYSTEM/hadoop/hive/apache-hive-2.0.0

export HIVE_HOME

export PATH=$PATH:$HIVE_HOME/bin

#HIVE VARIABLES END

Refresh the environment

source ~/.bashrc

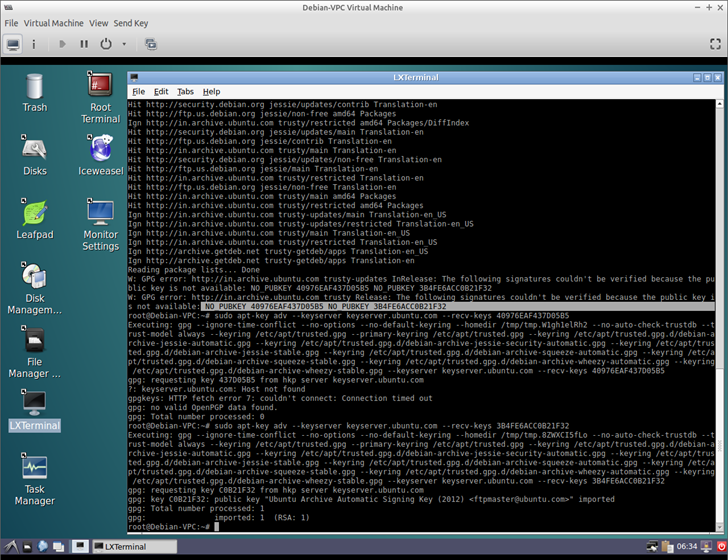
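To confirm that the refreshed environment picked up the new variables, a small check like the one below can help. It is a sketch assuming a POSIX shell; `check_hive_env` is a hypothetical helper name.

```shell
# Sketch: verify that HIVE_HOME is set and its bin directory is on PATH.
# check_hive_env is a hypothetical helper name.
check_hive_env() {
    if [ -z "${HIVE_HOME:-}" ]; then
        echo "HIVE_HOME is not set"
        return 1
    fi
    # Wrap PATH in colons so the first and last entries match as well
    case ":$PATH:" in
        *":$HIVE_HOME/bin:"*)
            echo "HIVE_HOME=$HIVE_HOME (bin is on PATH)"
            ;;
        *)
            echo "$HIVE_HOME/bin is not on PATH"
            return 1
            ;;
    esac
}

# Usage: check_hive_env
```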

Set up and create the Meta Store schema in MySQL (you may need to download the MySQL Connector/J JAR file into Hive's lib folder)

bin/schematool -dbType mysql -initSchema
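schematool loads the driver class configured in hive-site.xml (com.mysql.jdbc.Driver), so the connector JAR has to be in Hive's lib folder before the command above is run. The sketch below checks for it; `has_mysql_connector` is a hypothetical helper name, and the `mysql-connector-java-*.jar` file name pattern is an assumption based on how Connector/J 5.x releases are usually named.

```shell
# Sketch: check whether a MySQL connector JAR is present in a lib folder.
# has_mysql_connector is a hypothetical helper name; the file name
# pattern is an assumption (Connector/J 5.x naming).
has_mysql_connector() {
    lib_dir="$1"
    for jar in "$lib_dir"/mysql-connector-java-*.jar; do
        # If the glob matched nothing, $jar is the literal pattern and -f fails
        [ -f "$jar" ] && return 0
    done
    echo "no mysql-connector-java-*.jar in $lib_dir" >&2
    return 1
}

# Usage: has_mysql_connector "$HIVE_HOME/lib"
```

Once the schema has been initialized, running `bin/schematool -dbType mysql -info` should report the schema version stored in the Meta Store.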

Start Hive2 Server

hiveserver2
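With the server running, a client can connect over JDBC using Beeline, which ships in Hive's bin folder. The sketch below builds the connection URL, assuming HiveServer2 listens on its default port 10000 and that `hduser` (the user named in the proxy-user settings) is the account you connect as; `hs2_jdbc_url` is a hypothetical helper name.

```shell
# Sketch: build a HiveServer2 JDBC URL for use with beeline.
# hs2_jdbc_url is a hypothetical helper name.
hs2_jdbc_url() {
    host="$1"
    port="${2:-10000}"   # HiveServer2 default Thrift port
    db="${3:-default}"   # Hive's default database
    echo "jdbc:hive2://$host:$port/$db"
}

# Usage (assumes Hive's bin directory is on PATH and hduser is your user):
#   beeline -u "$(hs2_jdbc_url HDAppsNode-1)" -n hduser -e 'SHOW DATABASES;'
```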