Debian is a great operating system and worth a try. It's lightweight and has a strong community behind it, though it is aimed more at advanced users. If you want to migrate from Ubuntu to Debian, for better control or any other reason, you may miss some useful features or programs that are distributed through the Ubuntu repositories.

I recently moved from Ubuntu 14.04 LTS to Debian Jessie LTS. While setting up ‘KVM’ with nested virtualization, I found that the ‘kvm-ok’ command comes from Ubuntu's main repository and does not seem to be available in Debian. Below are the steps I followed to add the Ubuntu repository to Debian and install the necessary Ubuntu package.

1. On an Ubuntu system, find the URL of the repository that contains the desired package.

On the Ubuntu system, open ‘/etc/apt/sources.list’ and copy the required repository URL. Here we take the main Ubuntu repository URL, which contains the ‘cpu-checker’ package; ‘kvm-ok’ is shipped as part of ‘cpu-checker’.
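If the Ubuntu sources list is long, the candidate lines can be filtered instead of read by eye. This is a minimal sketch, assuming the classic one-line `deb URI suite components...` format; `repo_lines` is a hypothetical helper name, not part of APT. Running `apt-cache policy cpu-checker` on the Ubuntu machine is another way to see which archive and suite the package comes from.

```shell
# repo_lines COMPONENT [FILE]
# Print active (uncommented) "deb" lines from an APT sources list whose
# component list includes COMPONENT. Hypothetical helper, not part of APT.
repo_lines() {
    component=$1
    file=${2:-/etc/apt/sources.list}
    # Fields: $1 = "deb", $2 = URI, $3 = suite, $4 onward = components.
    # Commented lines ("# deb ...") and "deb-src" lines are skipped.
    # Lines using "[option=value]" after "deb" are not handled here.
    awk -v c="$component" '
        $1 == "deb" {
            for (i = 4; i <= NF; i++) if ($i == c) { print; next }
        }
    ' "$file"
}

# Example: show the lines that carry the "main" component.
# repo_lines main
```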

Note: we are using a ‘trusty’ suite because Trusty is based on Debian Jessie, the release we are importing the packages into. This helps retain package compatibility.

To check which Debian version a given Ubuntu release is based on, see this link.
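Even with a compatible base, once the Ubuntu line is enabled apt may start preferring Ubuntu's builds of packages that Debian also ships, since Ubuntu version strings often sort higher. A common safeguard is an APT pin that lowers the Ubuntu archive's priority. The sketch below assumes the mirror's Release file carries `Origin: Ubuntu` (as archive.ubuntu.com mirrors do); the helper name `write_ubuntu_pin` and the preferences file name are made up for illustration.

```shell
# write_ubuntu_pin FILE
# Write an APT preferences file giving every package from the Ubuntu
# archive priority 100, below the default 500 of Debian's own archive,
# so Debian's versions stay the install candidates when both exist.
# Hypothetical helper; run as root when FILE is under /etc/apt.
write_ubuntu_pin() {
    cat > "$1" <<'EOF'
Package: *
Pin: release o=Ubuntu
Pin-Priority: 100
EOF
}

# Example (as root):
# write_ubuntu_pin /etc/apt/preferences.d/ubuntu-trusty
```

With priority 100, a package that exists only in the Ubuntu archive, such as ‘cpu-checker’, should still be installable because no Debian version competes with it; `apt-cache policy cpu-checker` shows the resulting priorities.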

2. Add the repository URL to the target Debian sources list and update.

$ echo 'deb http://in.archive.ubuntu.com/ubuntu/ trusty-updates main restricted' | sudo tee -a /etc/apt/sources.list

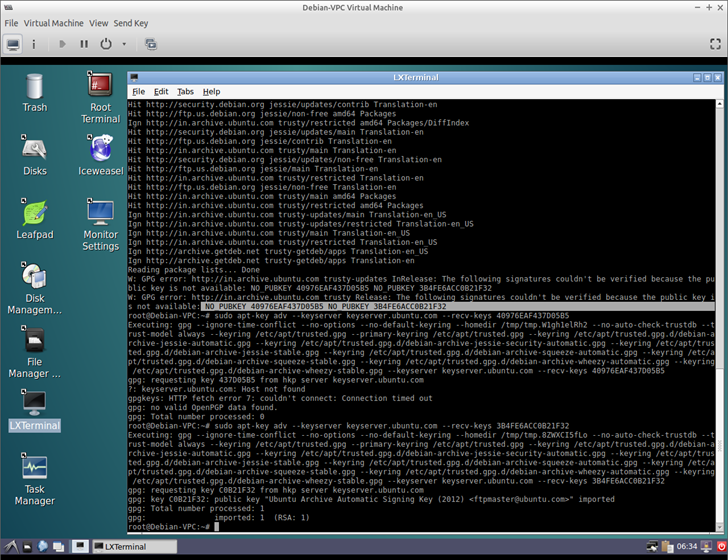
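Running the append command a second time adds a second copy of the line. A slightly safer sketch, assuming you may repeat this step, appends only when the exact line is missing; `add_apt_line` is a hypothetical helper. Putting the line in its own file under `/etc/apt/sources.list.d/` is another option that leaves Debian's main list untouched.

```shell
# add_apt_line LINE [FILE]
# Append LINE to an APT sources list only if that exact line is not
# already there, so re-running the step leaves no duplicate entries.
# Hypothetical helper; run as root when FILE is under /etc/apt.
add_apt_line() {
    line=$1
    file=${2:-/etc/apt/sources.list}
    # -q: quiet, -x: match the whole line, -F: fixed string, not a regex
    if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$file"
    fi
}

# Example (as root):
# add_apt_line 'deb http://in.archive.ubuntu.com/ubuntu/ trusty-updates main restricted'
```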

$ sudo apt-get update

You will get errors because the Ubuntu-specific public keys are not present on the Debian system. The error message tells you which public keys you need to import. For example, I got the error below after the update:

“W: GPG error: http://in.archive.ubuntu.com trusty-updates InRelease: The following signatures couldn't be verified because the public key is not available: NO_PUBKEY 40976EAF437D05B5 NO_PUBKEY 3B4FE6ACC0B21F32”

So I had to run the following commands:

sudo apt-key adv --keyserver keyserver.ubuntu.com --recv-keys 40976EAF437D05B5

sudo apt-key adv --keyserver keyserver.ubuntu.com --recv-keys 3B4FE6ACC0B21F32
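Instead of copying the key IDs out of the warning by hand, they can be pulled from the `apt-get update` output. This is a sketch under the assumption that the warnings keep the `NO_PUBKEY <hex id>` form shown above; both function names are hypothetical, and the second one simply loops over the same `apt-key adv` command used above.

```shell
# missing_pubkeys
# Read "apt-get update" output on stdin and print each key ID reported
# as NO_PUBKEY, one per line, sorted and without duplicates.
missing_pubkeys() {
    grep -oE 'NO_PUBKEY [0-9A-F]+' | awk '{ print $2 }' | sort -u
}

# import_missing_pubkeys
# Run the update, collect the missing key IDs and fetch each one from
# keyserver.ubuntu.com. Needs sudo and network access.
import_missing_pubkeys() {
    sudo apt-get update 2>&1 | missing_pubkeys | while read -r key; do
        # </dev/null keeps apt-key from consuming the remaining key IDs
        sudo apt-key adv --keyserver keyserver.ubuntu.com --recv-keys "$key" </dev/null
    done
}
```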

3. Update Debian again and install the required package using apt-get.

sudo apt-get update

sudo apt-get install cpu-checker

sudo kvm-ok

INFO: /dev/kvm exists

KVM acceleration can be used
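For a rough idea of what is being tested, the sketch below approximates two of the things kvm-ok looks at: the `vmx` (Intel VT-x) or `svm` (AMD-V) CPU flag, and the presence of `/dev/kvm`. It is not kvm-ok and does not replicate its other checks, such as detecting virtualization disabled in the BIOS. Since the goal here was nested virtualization, it also reads the `nested` parameter of the `kvm_intel` or `kvm_amd` module, which shows `Y` or `1` when nesting is enabled. The function names are hypothetical.

```shell
# kvm_quick_check [CPUINFO] [KVM_DEV]
# Rough approximation of two kvm-ok checks (not kvm-ok itself):
# a vmx/svm CPU flag and the existence of the KVM device node.
kvm_quick_check() {
    cpuinfo=${1:-/proc/cpuinfo}
    kvm_dev=${2:-/dev/kvm}
    if ! grep -qwE 'vmx|svm' "$cpuinfo"; then
        echo "no vmx/svm flag: no hardware virtualization reported"
        return 1
    fi
    if [ ! -e "$kvm_dev" ]; then
        echo "$kvm_dev not found (kvm module not loaded?)"
        return 1
    fi
    echo "$kvm_dev exists and a vmx/svm flag is present"
}

# nested_kvm_status
# Print the "nested" parameter of whichever KVM module is loaded.
nested_kvm_status() {
    for f in /sys/module/kvm_intel/parameters/nested \
             /sys/module/kvm_amd/parameters/nested; do
        [ -r "$f" ] && echo "$f: $(cat "$f")"
    done
    return 0
}
```

Calling `kvm_quick_check && nested_kvm_status` with no arguments uses the real `/proc/cpuinfo` and `/dev/kvm`.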