One of the features we like most in the Microsoft .NET languages (C#, VB.NET) is 'Reflection'.

You may already know the capabilities of 'Reflection' in .NET. It is most often used together with 'Custom Attributes'.

But we've found another very good use for it: using Reflection to achieve 'Late Binding' in our code.

(This is especially useful when you are integrating your application with external COM servers, such as Out-of-Process servers [EXE] or In-Process servers [DLL], which are written in other native languages like Visual C++ [ATL or MFC].)

This is not limited to integration with 'COM Servers'. We can also use 'Late Binding' with .NET types, especially those that reside in other assemblies.
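As a minimal sketch of late binding to a plain .NET type, the snippet below locates a type by name at run time, creates an instance, and calls a method on it without naming the type at compile time. It uses `System.Text.StringBuilder` only because that type is always available; the class and method names (`NetTypeLateBindingDemo`, `AppendLateBound`) are hypothetical. For a type that lives in another assembly, an assembly-qualified name would be needed in place of the plain type name.

```csharp
using System;
using System.Reflection;

public static class NetTypeLateBindingDemo
{
    // Hypothetical helper: appends text through a StringBuilder that is
    // located, created, and invoked purely by name at run time.
    public static string AppendLateBound(string text)
    {
        // Resolve the type from its name instead of referencing it in code.
        // A type in another assembly would need an assembly-qualified name.
        Type type = Type.GetType("System.Text.StringBuilder", throwOnError: true);

        // Create an instance through its default constructor.
        object builder = Activator.CreateInstance(type);

        // Look up the Append(string) overload and invoke it.
        MethodInfo append = type.GetMethod("Append", new[] { typeof(string) });
        append.Invoke(builder, new object[] { text });

        return builder.ToString();
    }

    public static void Main()
    {
        Console.WriteLine(AppendLateBound("late bound"));
    }
}
```

Because nothing is checked at compile time, a misspelled type or method name only surfaces as a run-time failure.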

In other words, through 'Reflection' you can remove the version dependency on a referenced assembly or COM object in your project.

But implementing 'Version Independence' through the Reflection APIs is quite complex and untidy. The 'dynamic' keyword, introduced in C# 4.0, helps on exactly that front: you don't have to dig out the available methods and properties through the Reflection APIs yourself. That lookup is done under the hood.

Before going into the details, we should be aware of two well-known scenarios: 'Early Binding' and 'Late Binding'.

Early Binding

To understand the scenario, let's take a case study that was a real exercise for our team. We had to create a .NET Windows application that extends 'Office Outlook' notifications. By default, Outlook does not show 'Desktop Notifications' for new mail arriving in secondary mailboxes. We wanted to add that functionality, which our support team had long been waiting for, because they have dedicated secondary mailboxes for their support-related mail. Until then, they had to check those mailboxes periodically for new mail. Quite tedious, right? If we could show the typical 'Outlook Mail Notifications' for new mail arriving in secondary mailboxes, it would be a huge time saver for our support team.

So obviously, we needed to create a .NET Windows application integrated with 'Office Outlook'. As you may know, Office Outlook is a 'COM Server' (Out-of-Process, EXE). At the time of development, we all had Office Outlook 2007 installed on our machines, so we took the easy way out. We created the .NET project and added the 'Office Outlook 2007' reference from the COM tab. The implementation was quite easy, and we completed the project in a few weeks.

Deployment commenced, and the support team was quite happy with the features. They no longer had to scan the mailboxes periodically! A few more months passed, until the inevitable happened.

The IT Infrastructure team rolled out a new version of Office (Office 2012), so Office Outlook 2007 was upgraded to Office Outlook 2012. To our surprise, our application crashed.

Why? This is an 'Early Binding' scenario, where our application requires its dependencies (in this case Outlook) to be available in the exact version that was referenced during development.

So what could be the solution? Simple: just recompile the project after changing the reference from Office Outlook 2007 to Office Outlook 2012. But what happens when yet another version of Office (Office 365) is deployed? Every time, we would have to recompile and redeploy our application.

Obviously, this is not a real solution. The actual solution is 'Late Binding'.

Late Binding

If we can access 'Office Outlook' at run time without caring about its version, that would be the most viable solution. We would not have to recompile the application every time Office is upgraded.

This 'Late Binding' is achieved through a technique called 'Reflection' in .NET.

The application we've just talked about is detailed in this blog. It has been implemented entirely through '.NET Reflection', and it works with any version of Office Outlook (2007, 2012, 365, etc.). If you're curious about the implementation, you can also download the source from the above link.

OK, that's the whole theory. Now, if you explore the '.NET Reflection' APIs, you will find them quite difficult to use (we will soon get into that with a real example). That means we are compromising 'Ease of Implementation' to achieve 'Version Independence'. Can we have the best of both worlds?

That is, both 'Ease of Implementation' and 'Version Independence'? Yes, we can, with the 'dynamic' feature of C#.

We can understand the concepts with a simple example and a code walkthrough.

Code Sample (Early Binding [Assembly Reference] ---> Late Binding [Reflection] ---> Late Binding [Dynamic] )

For this exercise, we will automate 'Office Word' (a COM server written in Visual C++!) from a .NET program. It is a simple Office Word automation.

From our .NET application, we will perform the steps below:

a. Create the Word Application Object

b. Create a Blank Word Document Object

c. Make the 'Word' application visible

d. Close the Word application

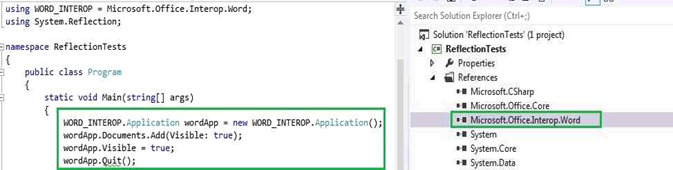

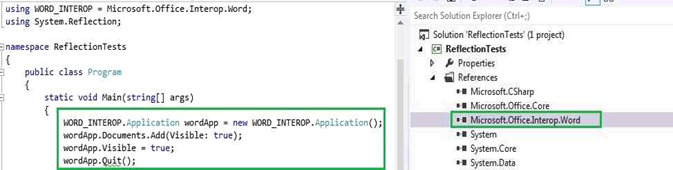

Early Binding Implementation:

As we've already discussed, here we have a static dependency on 'Interop.Word' (in our case, Office Word 2007). But the implementation is quite straightforward and easy.
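A minimal sketch of steps a to d with early binding might look like the following. It assumes a project reference to the `Microsoft.Office.Interop.Word` interop assembly, C# 4.0 or later, and an installed copy of Word; the class name `EarlyBindingDemo` is hypothetical.

```csharp
using Word = Microsoft.Office.Interop.Word;

class EarlyBindingDemo
{
    static void Main()
    {
        // a. Create the Word Application object. The type is resolved at
        //    compile time from the referenced interop assembly. Declaring it
        //    as _Application avoids the Quit method/event ambiguity warning.
        Word._Application app = new Word.Application();

        // b. Create a blank document.
        Word.Document doc = app.Documents.Add();

        // c. Make the Word application visible.
        app.Visible = true;

        // d. Close the Word application without saving the blank document.
        app.Quit(SaveChanges: false);
    }
}
```

Every type and member here is checked by the compiler, which is what makes this version easy to write and also what ties it to the referenced interop assembly.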

Late Binding Implementation (Reflection):

As you can see, the implementation is quite lengthy and tedious with 'Reflection'. But look at the best part: we have not even referenced the 'Office Word Interop' in the 'References' section (see the right-hand side of the figure), and that is why it works with every version of 'Office Word'.
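The same four steps through Reflection can be sketched roughly as below. This assumes Windows with Word installed and registered under the version-independent ProgID `Word.Application`; the class name `ReflectionLateBindingDemo` is hypothetical. No Word interop reference is needed to compile it.

```csharp
using System;
using System.Reflection;

class ReflectionLateBindingDemo
{
    static void Main()
    {
        // Look up the COM type by its ProgID at run time.
        Type wordType = Type.GetTypeFromProgID("Word.Application");
        if (wordType == null)
        {
            Console.WriteLine("Word does not appear to be installed.");
            return;
        }

        // a. Create the Word Application object.
        object app = Activator.CreateInstance(wordType);

        // b. Create a blank document: read the Documents property,
        //    then invoke Add on the returned collection.
        object documents = wordType.InvokeMember(
            "Documents", BindingFlags.GetProperty, null, app, null);
        documents.GetType().InvokeMember(
            "Add", BindingFlags.InvokeMethod, null, documents, null);

        // c. Make the Word application visible.
        wordType.InvokeMember(
            "Visible", BindingFlags.SetProperty, null, app, new object[] { true });

        // d. Close the Word application without saving (SaveChanges = false).
        wordType.InvokeMember(
            "Quit", BindingFlags.InvokeMethod, null, app, new object[] { false });
    }
}
```

Every member is named by a string, so a typo such as "Visable" compiles fine and only fails when that line runs.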

Late Binding Implementation (Dynamic):

As you can see, with the 'dynamic' keyword you have the best of both worlds. You have no dependency on the 'Office Word Interop' (see the right-hand side of the figure), and at the same time you have an implementation as easy as in the 'Early Binding' scenario.
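With 'dynamic', a sketch of the same four steps could look like this. As before, it assumes Windows with Word installed and the ProgID `Word.Application` registered, plus C# 4.0 or later; the class name `DynamicLateBindingDemo` is hypothetical.

```csharp
using System;

class DynamicLateBindingDemo
{
    static void Main()
    {
        // Look up the COM type by its ProgID at run time.
        Type wordType = Type.GetTypeFromProgID("Word.Application");
        if (wordType == null)
        {
            Console.WriteLine("Word does not appear to be installed.");
            return;
        }

        // a. Create the Word Application object. Declaring it as dynamic
        //    defers all member lookups on it to run time.
        dynamic app = Activator.CreateInstance(wordType);

        // b. Create a blank document.
        app.Documents.Add();

        // c. Make the Word application visible.
        app.Visible = true;

        // d. Close the Word application without saving (SaveChanges = false).
        app.Quit(false);
    }
}
```

The calls read almost like the early-bound version, yet member names are still resolved at run time, so mistakes in them surface as run-time binder exceptions instead of compile errors.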

Conclusion:

'Early Binding' (static dependencies) can be a problem when referencing COM servers or external assemblies in your .NET program.

'Late Binding' can resolve this dependency issue through 'Reflection'.

But 'Reflection' makes your code lengthier and more complex.

The 'dynamic' keyword brings you the best of both worlds: the ease of 'Early Binding' and 'Version Independence'.

Comments, Suggestions! Most Welcome!