This is Part II of the Hadoop @ Desk series. I hope you’ve gone through the setup prerequisites in Part I and are ready to continue with the Hadoop 2.0+ setup.

As discussed in Part I, my permanent desktop OS (the host OS, which also dual-boots with Windows 7) is Lubuntu 14.04 LTS. I’ve also set up the Type 1 virtualization suite Qemu-KVM on the host. The guest runs the exact same OS (Lubuntu 14.04 LTS), and I created it with the Virt-Manager 1.0 UI.

I’ve compiled the steps based on some external blogs (read here, here and here).

Power on your guest now. All of the steps below are run on the guest, at the bash command line.

A. Setting up the base environment for Hadoop

1. Update your system sources

$ sudo apt-get update

2. Install Java (Open JDK)

$ sudo apt-get install default-jdk

3. Add a dedicated Hadoop group and user, then add the new user (hduser) to the ‘sudo’ group.

$ sudo addgroup hadoop

$ sudo adduser --ingroup hadoop hduser

$ sudo adduser hduser sudo

4. Install Secure Shell (ssh)

$ sudo apt-get install ssh

5. Set up ‘ssh’ keys

$ su hduser
Password:

$ ssh-keygen -t rsa -P ""

$ cat $HOME/.ssh/id_rsa.pub >> $HOME/.ssh/authorized_keys

$ ssh localhost

The 3rd command adds the newly created key to the list of authorized keys so that Hadoop can use ssh without prompting for a password.

Note: When ssh-keygen prompts for the file name, just hit Enter to accept the default.
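If ‘ssh localhost’ still asks for a password after these steps, overly permissive modes on the key files are a common cause, because sshd by default refuses an authorized_keys file that other users can write to. Below is a minimal sketch of tightening them. The function name tighten_ssh_perms is made up for this example, and it assumes GNU coreutils on the guest.

```shell
# Hypothetical helper (not part of ssh): restrict an ssh directory and its
# authorized_keys file to the owning user. $1 = the .ssh directory.
tighten_ssh_perms() {
  ssh_dir="$1"
  # Only the owner may enter or list the directory.
  chmod 700 "$ssh_dir" || return 1
  # Only the owner may read or change the list of accepted keys.
  if [ -f "$ssh_dir/authorized_keys" ]; then
    chmod 600 "$ssh_dir/authorized_keys" || return 1
  fi
}

# Example (run as hduser):
#   tighten_ssh_perms "$HOME/.ssh"
```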

At this point, snapshot your VM and restart the VM.

B. Setting up Hadoop

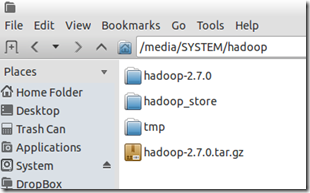

We’ve now set up the base environment that Hadoop requires, so we can move on to installing Hadoop 2.7. Please note that I’m installing all of Hadoop on a dedicated ext4 partition (not the root partition) for easier management. You need to replace this path to match your environment (i.e. replace /media/SYSTEM with your own path).

Note: In the steps below, every path that starts with /media/SYSTEM is one you need to replace with your own.

6. Get the core Hadoop package (version 2.7). Extract it, then move it to the dedicated Hadoop partition.

$ sudo chmod 777 /media/SYSTEM

$ wget http://apache.mesi.com.ar/hadoop/common/hadoop-2.7.0/hadoop-2.7.0.tar.gz

$ tar xvzf hadoop-2.7.0.tar.gz

$ sudo mkdir -p /media/SYSTEM/hadoop

$ sudo mv hadoop-2.7.0 /media/SYSTEM/hadoop/

$ sudo chown -R hduser:hadoop /media/SYSTEM/hadoop

Also create a ‘tmp’ folder, which will be used by hadoop later.

$ sudo mkdir -p /media/SYSTEM/hadoop/tmp

$ sudo chown hduser:hadoop /media/SYSTEM/hadoop/tmp
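Since the archive in step 6 comes from a mirror, it can be worth comparing it with the checksum published by Apache before relying on it. Here is a rough sketch, assuming a SHA-256 digest is available for your release and that sha256sum is installed on the guest. The helper name verify_sha256 is made up for this example.

```shell
# Hypothetical helper: compare a file's SHA-256 digest with an expected value.
# $1 = file to check
# $2 = expected hex digest, copied by hand from the Apache download page
verify_sha256() {
  actual=$(sha256sum "$1" | awk '{print $1}')
  # Published digests are sometimes upper case; normalise before comparing.
  expected=$(printf '%s' "$2" | tr 'A-F' 'a-f')
  if [ "$actual" = "$expected" ]; then
    echo "checksum OK: $1"
  else
    echo "checksum MISMATCH: $1" >&2
    return 1
  fi
}

# Example (the digest here is a placeholder, not the real one):
#   verify_sha256 hadoop-2.7.0.tar.gz <digest-from-apache>
```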

C. Setting up the Hadoop configuration files

The following files will have to be modified to complete the Hadoop setup: (Replace the paths based on your environment)

~/.bashrc

/media/SYSTEM/hadoop/hadoop-2.7.0/etc/hadoop/hadoop-env.sh

/media/SYSTEM/hadoop/hadoop-2.7.0/etc/hadoop/core-site.xml

/media/SYSTEM/hadoop/hadoop-2.7.0/etc/hadoop/mapred-site.xml.template

/media/SYSTEM/hadoop/hadoop-2.7.0/etc/hadoop/hdfs-site.xml

7. Update .bashrc

Before editing the .bashrc file in our home directory, we need to find the path where Java is installed, so that we can set the JAVA_HOME environment variable. The following command shows it:

$ update-alternatives --config java
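The path printed by update-alternatives points at the java binary itself, while JAVA_HOME should be the JDK directory above it. As a small sketch of that conversion (the helper name java_home_from_binary is made up for this example):

```shell
# Hypothetical helper: turn the path of a java binary into a JAVA_HOME value
# by stripping the trailing /bin/java and, if present, the /jre component.
java_home_from_binary() {
  printf '%s\n' "$1" | sed -e 's:/bin/java$::' -e 's:/jre$::'
}

# Example on the guest (resolves the symlink chain behind the java command):
#   java_home_from_binary "$(readlink -f "$(command -v java)")"
```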

Append the below to the end of .bashrc. Change ‘JAVA_HOME’ and ‘HADOOP_INSTALL’, as per your environment.

$ vi ~/.bashrc

#HADOOP VARIABLES START

export JAVA_HOME=/usr/lib/jvm/java-7-openjdk-amd64

export HADOOP_INSTALL=/media/SYSTEM/hadoop/hadoop-2.7.0

export PATH=$PATH:$HADOOP_INSTALL/bin

export PATH=$PATH:$HADOOP_INSTALL/sbin

export HADOOP_MAPRED_HOME=$HADOOP_INSTALL

export HADOOP_COMMON_HOME=$HADOOP_INSTALL

export HADOOP_HDFS_HOME=$HADOOP_INSTALL

export YARN_HOME=$HADOOP_INSTALL

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_INSTALL/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_INSTALL/lib/native"

#HADOOP VARIABLES END
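After saving .bashrc and running ‘source ~/.bashrc’ (or opening a new terminal), a quick check like the one below can catch a mistyped path early. This is only a sketch. The function name check_hadoop_env is made up, and it looks at just the two variables you are asked to adapt.

```shell
# Hypothetical helper: report whether JAVA_HOME and HADOOP_INSTALL are set
# and point at existing directories. Returns non-zero if either check fails.
check_hadoop_env() {
  missing=0
  for name in JAVA_HOME HADOOP_INSTALL; do
    # Look up the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "$name is not set"
      missing=1
    elif [ ! -d "$value" ]; then
      echo "$name points to a missing directory: $value"
      missing=1
    else
      echo "$name=$value"
    fi
  done
  return "$missing"
}

# Example:
#   source ~/.bashrc && check_hadoop_env
```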

8. Update ‘hadoop-env.sh’

We need to set JAVA_HOME in the hadoop-env.sh file. Adding the export statement below to hadoop-env.sh ensures that the value of JAVA_HOME is available to Hadoop whenever it starts up.

$ vi /media/SYSTEM/hadoop/hadoop-2.7.0/etc/hadoop/hadoop-env.sh

export JAVA_HOME=/usr/lib/jvm/java-7-openjdk-amd64

9. Update ‘core-site.xml’

The ‘core-site.xml’ file contains configuration properties that Hadoop uses when starting up. This file can be used to override the default settings that Hadoop starts with.

Open the file and enter the following in between the <configuration></configuration> tag:

$ vi /media/SYSTEM/hadoop/hadoop-2.7.0/etc/hadoop/core-site.xml

<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/media/SYSTEM/hadoop/tmp</value>
    <description>A base for other temporary directories.</description>
  </property>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:54310</value>
    <description>The name of the default file system. A URI whose scheme and authority determine the FileSystem implementation. The uri's scheme determines the config property (fs.SCHEME.impl) naming the FileSystem implementation class. The uri's authority is used to determine the host, port, etc. for a filesystem.</description>
  </property>
  <property>
    <name>dfs.permissions.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>dfs.permissions.superusergroup</name>
    <value>hadoop</value>
  </property>
</configuration>
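To double-check what ended up in the file, a property can be read back from the command line. The sketch below is a rough text-based reader, not a real XML parser: it assumes each property lists its name directly before its value (as in the file above) and relies on GNU sed. The function name get_hadoop_property is made up for this example.

```shell
# Hypothetical helper: print the <value> of one property from a Hadoop
# *-site.xml file. $1 = config file, $2 = property name.
get_hadoop_property() {
  # Escape the dots in the property name so they match literally.
  name_re=$(printf '%s' "$2" | sed 's/\./\\./g')
  # Join the file into one line, then put each <property> on its own line
  # so the final sed can match name and value within a single property.
  tr -d '\n' < "$1" \
    | sed 's:</property>:</property>\n:g' \
    | sed -n "s:.*<name>$name_re</name>[[:space:]]*<value>\([^<]*\)</value>.*:\1:p"
}

# Example:
#   get_hadoop_property /media/SYSTEM/hadoop/hadoop-2.7.0/etc/hadoop/core-site.xml fs.default.name
```

Once Hadoop is on the PATH, ‘hdfs getconf -confKey fs.default.name’ reports the value Hadoop itself resolves, which is the more reliable check.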

10. Update ‘mapred-site.xml’

Create ‘mapred-site.xml’ from the template that ships with the Hadoop installation:

$ cp /media/SYSTEM/hadoop/hadoop-2.7.0/etc/hadoop/mapred-site.xml.template /media/SYSTEM/hadoop/hadoop-2.7.0/etc/hadoop/mapred-site.xml

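The mapred-site.xml file holds the MapReduce settings, including which framework is used for MapReduce. In Hadoop 2.x the framework itself is selected by the mapreduce.framework.name property (set it to yarn to run jobs on YARN); the mapred.job.tracker property used below is the older Hadoop 1.x JobTracker address.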

We need to enter the following content in between the <configuration></configuration> tag:

<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>localhost:54311</value>
    <description>The host and port that the MapReduce job tracker runs at. If "local", then jobs are run in-process as a single map and reduce task.</description>
  </property>
</configuration>

11. Update ‘hdfs-site.xml’

The ‘/media/SYSTEM/hadoop/hadoop-2.7.0/etc/hadoop/hdfs-site.xml’ file needs to be configured for each host in the cluster that is being used.

It is used to specify the directories which will be used as the namenode and the datanode on that host.

Before editing this file, we need to create two directories which will contain the namenode and the datanode for this Hadoop installation.

This can be done using the following commands:

$ sudo mkdir -p /media/SYSTEM/hadoop/hadoop_store/hdfs/namenode

$ sudo mkdir -p /media/SYSTEM/hadoop/hadoop_store/hdfs/datanode

$ sudo chown -R hduser:hadoop /media/SYSTEM/hadoop/hadoop_store

Open the file and enter the following content in between the <configuration></configuration> tag:

$ vi /media/SYSTEM/hadoop/hadoop-2.7.0/etc/hadoop/hdfs-site.xml

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
    <description>Default block replication. The actual number of replications can be specified when the file is created. The default is used if replication is not specified in create time.</description>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/media/SYSTEM/hadoop/hadoop_store/hdfs/namenode</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/media/SYSTEM/hadoop/hadoop_store/hdfs/datanode</value>
  </property>
</configuration>
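Before moving on, it may help to confirm that the directories named in hdfs-site.xml exist and are writable by the user who will run Hadoop. A minimal sketch follows; the function name check_hadoop_dirs is made up for this example.

```shell
# Hypothetical helper: check that every directory given as an argument
# exists and is writable by the current user. Returns non-zero otherwise.
check_hadoop_dirs() {
  status=0
  for dir in "$@"; do
    if [ -d "$dir" ] && [ -w "$dir" ]; then
      echo "ok: $dir"
    else
      echo "missing or not writable: $dir" >&2
      status=1
    fi
  done
  return "$status"
}

# Example (run as hduser, with your own paths):
#   check_hadoop_dirs /media/SYSTEM/hadoop/hadoop_store/hdfs/namenode \
#                     /media/SYSTEM/hadoop/hadoop_store/hdfs/datanode \
#                     /media/SYSTEM/hadoop/tmp
```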

At this point, snapshot your VM and restart it so that the changes take effect.

D. Format the HDFS file system

Now the Hadoop file system needs to be formatted before we can start using it. The format command must be run by a user with write permission, since it creates a ‘current’ directory under the ‘/media/SYSTEM/hadoop/hadoop_store/hdfs/namenode’ folder:

12. Format Hadoop file system

$ su hduser

$ hadoop namenode -format

Note that the hadoop namenode -format command should be executed only once, before we start using Hadoop. If it is executed again after Hadoop has been used, it'll destroy all the data on the Hadoop file system.
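Because re-formatting is destructive, a small guard can be put in front of the format command. The sketch below relies on the fact that a formatted namenode directory contains a current/VERSION file. The function name namenode_is_formatted is made up for this example.

```shell
# Hypothetical helper: succeed if the given namenode directory already holds
# a formatted file system (i.e. a current/VERSION file exists inside it).
namenode_is_formatted() {
  [ -f "$1/current/VERSION" ]
}

# Example (with your own path):
#   if namenode_is_formatted /media/SYSTEM/hadoop/hadoop_store/hdfs/namenode; then
#     echo "namenode already formatted, not formatting again"
#   else
#     hadoop namenode -format
#   fi
```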

E. Start/Stop Hadoop

Now it's time to start the newly installed single node cluster.

13. Start Hadoop

We can use start-all.sh, or start-dfs.sh followed by start-yarn.sh:

$ start-all.sh

We can check if it's really up and running:

$ jps
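After start-all.sh on a single node, jps would normally list NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager (plus Jps itself). As a sketch of checking that automatically, the helper below reads jps output and prints whichever of those daemons is missing. The function name missing_daemons is made up for this example.

```shell
# Hypothetical helper: read jps output on stdin and print each expected
# Hadoop daemon that is not listed. Prints nothing if all five are present.
missing_daemons() {
  # jps prints "<pid> <name>" per line; keep only the names.
  running=$(awk '{print $2}')
  for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # -x matches whole lines, so SecondaryNameNode does not count as NameNode.
    printf '%s\n' "$running" | grep -qx "$daemon" || echo "$daemon"
  done
}

# Example:
#   jps | missing_daemons
```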

You can also verify by hitting the below URL in the browser.

http://localhost:50070/ - web UI of the NameNode daemon

14. Stop Hadoop

We run stop-all.sh or (stop-dfs.sh and stop-yarn.sh) to stop all the daemons running on our machine:

$ stop-all.sh

15. Test your installation

For testing, I copied a small file to the HDFS file system and displayed its contents from there.
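A test along those lines could look like the sketch below, which must run while the Hadoop daemons are up. It is not the exact set of commands I used. The function name hdfs_smoke_test, the sample text and the /tmp location inside HDFS are assumptions made for this example.

```shell
# Hypothetical helper: write a small local file, copy it into HDFS, read it
# back, and succeed only if the contents survived the round trip.
hdfs_smoke_test() {
  local_file=$(mktemp)
  remote_file="/tmp/smoke-test-$$.txt"   # path inside HDFS, not on local disk
  result=""
  echo "hello hdfs" > "$local_file"
  hdfs dfs -mkdir -p /tmp &&
    hdfs dfs -put "$local_file" "$remote_file" &&
    result=$(hdfs dfs -cat "$remote_file")
  # Clean up both copies regardless of the outcome.
  hdfs dfs -rm "$remote_file" >/dev/null 2>&1 || true
  rm -f "$local_file"
  [ "$result" = "hello hdfs" ]
}

# Example (run as hduser after start-all.sh):
#   hdfs_smoke_test && echo "HDFS round trip OK"
```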

16. Snapshot your VM

You now have a working Hadoop installation. Snapshot your VM at this point, so that if future experiments cause any issues, you can roll the VM back to this working state.
