It is quite feasible to host Docker clusters on top of Linux system containers such as LXD. This is an ideal setup for your local desktop to simulate a production cluster, which will predominantly be hosted on virtual machines (instead of Linux containers). Using Linux containers locally has some obvious advantages:

a. They behave like lightweight virtual machines (aka system containers, or "containers on steroids").

b. No virtual machine or guest OS overhead.

c. Incredibly fast to boot, with a smaller memory and CPU footprint.

d. Quick to copy, clone, and snapshot, taking less disk space.

e. You can run dozens of them on a desktop, as opposed to one or two virtual machines.

These are the main reasons to choose LXD for simulating a production cluster in a local environment.

The figure below shows how this has been organized in our desktop environment.

The steps to set up such a cluster are detailed below:

1. Setting up the Desktop (Host Machine)

1.1 Setting up LXD on ZFS

Our host system runs Lubuntu 16.04 LTS, with LXD installed on top of the ZFS file system. The AUFS kernel module has been enabled on the host so that it is available to the LXD containers and to Docker Engine. AUFS must be available so that Docker can use the aufs storage driver (instead of vfs) to manage its layered union filesystem, backed by the underlying ZFS.

With regard to networking, we’re using a prebuilt bridge instead of LXD’s default one, so that the LXD containers are accessible from anywhere in our LAN. This prebuilt bridge (vbr0) has already been bridged with the host machine’s network adapter (eth0).

All our LXD containers will be based on the Ubuntu 16.04 LTS (xenial) image. Run the following commands from the host machine console.

    sudo modprobe aufs
    sudo apt-get install zfs
    sudo zpool create -f ZFS-LXD-POOL vdb vdc -m none
    sudo zfs create -p -o mountpoint=/var/lib/lxd ZFS-LXD-POOL/LXD/var/lib
    sudo zfs create -p -o mountpoint=/var/log/lxd ZFS-LXD-POOL/LXD/var/log
    sudo zfs create -p -o mountpoint=/usr/lib/lxd ZFS-LXD-POOL/LXD/usr/lib
    sudo apt-get install lxd
    sudo apt-get install bridge-utils
    brctl show vbr0
    lxc profile device set default eth0 parent vbr0
    lxc profile show default
    lxc image list images:
    lxc image copy images:ubuntu/xenial/amd64 local: --alias=xenial64
    lxc image list

    #/etc/network/interfaces
    auto vbr0
    iface vbr0 inet dhcp
        bridge-ifaces eth0
        bridge-ports eth0
        up ifconfig eth0 up

    iface eth0 inet manual
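Since the aufs storage driver depends on the AUFS kernel module being loaded, it can be worth confirming that the filesystem is actually registered before going further. The following is a minimal sketch, not part of the original setup: `has_fs` is a hypothetical helper name, and the optional second argument exists only so the check can be tried against a sample file instead of `/proc/filesystems`.

```shell
# Sketch: report whether a filesystem type is registered with the kernel.
# has_fs is a hypothetical helper; the second argument defaults to
# /proc/filesystems and can point at a sample file for experimenting.
has_fs() {
    fs_name="$1"
    fs_table="${2:-/proc/filesystems}"
    # Each line is "<optional nodev flag><TAB><name>"; compare the last field.
    awk -v fs="$fs_name" '$NF == fs { found = 1 } END { exit !found }' "$fs_table"
}
```

For example, `has_fs aufs || sudo modprobe aufs` loads the module on the host only when it is missing. The same check can be run later inside an LXD container to see whether aufs is visible there.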

1.2 Setting up Docker-Machine and Docker Client

The LXD containers are provisioned with Docker through Docker-Machine, which automatically installs Docker-Engine and related tools. Hence we should install Docker-Machine on our host machine. Run the following from the host machine console.

    #https://github.com/docker/machine/releases
    sudo wget -L https://github.com/docker/machine/releases/download/v0.13.0/docker-machine-`uname -s`-`uname -m`
    sudo mv docker-machine-`uname -s`-`uname -m` /usr/local/bin/docker-machine
    sudo chmod +x /usr/local/bin/docker-machine
    sudo apt-get install docker.io

NB: Installing docker.io installs both Docker-Engine and the client, although we only need the client on the host machine. It also sets a DROP policy for forwarded packets on the iptables FORWARD chain in order to isolate the Docker network. As we don’t intend the host to be a Docker host, we should reverse this. Otherwise Docker containers inside the LXD containers won’t be able to forward packets through the host machine, and hence pulling images from the internet won’t work inside the LXD containers.

Long story short, run the command below on the host machine to restore the original policy.

    sudo iptables -P FORWARD ACCEPT
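To verify that the policy change took effect, the first line of `iptables -S FORWARD` can be inspected; it has the form `-P FORWARD <policy>`. The sketch below assumes that output format, and `forward_policy` is a hypothetical helper name that reads the output on stdin.

```shell
# Sketch: extract the default policy of the FORWARD chain from the output of
# "iptables -S FORWARD" (the policy line looks like "-P FORWARD DROP").
# forward_policy is a hypothetical helper that reads that output on stdin.
forward_policy() {
    awk '$1 == "-P" && $2 == "FORWARD" { print $3; exit }'
}
```

Running `sudo iptables -S FORWARD | forward_policy` should then print ACCEPT. Keep in mind that a policy set with `iptables -P` is not persisted across reboots, so the check may be worth repeating after the host restarts.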

2. Preparing LXD Containers for Docker Hosting

Before the LXD containers can be used, they have to be prepared for Docker. This means making each container privileged and supplying the kernel modules Docker requires.

The steps are:

2.1 Creating the containers (CNT-1 and CNT-2)

2.2 Attaching the Docker-required profile configuration to the containers

2.3 Creating a user with passwordless sudo access

2.4 Setting up an SSH server with public key authentication

    #Run from host machine console
    lxc launch xenial64 CNT-1 -p default -p docker
    #Docker required settings in LXD - Very Important!!!
    lxc config set CNT-1 security.privileged true
    lxc config set CNT-1 security.nesting true
    lxc config set CNT-1 linux.kernel_modules "aufs,zfs,overlay,nf_nat,br_netfilter"
    lxc restart CNT-1
    lxc exec CNT-1 /bin/bash

    #Run from LXD (CNT-1) container console
    sudo touch /.dockerenv
    adduser docusr
    usermod -aG sudo docusr
    sudo vi /etc/sudoers   #append line: docusr ALL=(ALL) NOPASSWD: ALL
    groupadd -r sshusr
    usermod -a -G sshusr docusr
    sudo apt-get install openssh-server
    sudo cp /etc/ssh/sshd_config /etc/ssh/sshd_config.original
    sudo vi /etc/ssh/sshd_config   #append line: AllowGroups sshusr
    sudo service ssh restart   #so that sshd picks up the AllowGroups change
    exit

    #Run from Host Machine (Desktop) console
    ssh-keygen -t rsa
    ssh-copy-id docusr@<IP Of CNT-1>

Note: We’ve created an empty .dockerenv file at the root of the file system (/). This is required for overlay networking to work in Docker Swarm clustering, as per this link.

The sample above is for one LXD container (CNT-1); repeat it for CNT-2.
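The host-side part of the preparation is identical for every container, so it lends itself to a small loop. This is only a sketch under a few assumptions: `run`, `prepare_container` and `DRY_RUN` are hypothetical names (not part of LXD), and with `DRY_RUN=1`, the default here, the commands are printed rather than executed so they can be reviewed first.

```shell
# Sketch: apply the same LXD settings to every container in the cluster.
# run, prepare_container and DRY_RUN are hypothetical names, not part of LXD.
# With DRY_RUN=1 (the default here) commands are printed instead of executed.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

prepare_container() {
    name="$1"
    run lxc launch xenial64 "$name" -p default -p docker
    run lxc config set "$name" security.privileged true
    run lxc config set "$name" security.nesting true
    run lxc config set "$name" linux.kernel_modules "aufs,zfs,overlay,nf_nat,br_netfilter"
    run lxc restart "$name"
}

for cnt in CNT-1 CNT-2; do
    prepare_container "$cnt"
done
```

Setting `DRY_RUN=0` before running the script executes the commands for real. The steps inside each container (user creation, sudoers, SSH server) are interactive and still have to be done per container.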

3. Docker Machine to provision LXD Containers

Docker-Machine does not have a native driver for LXD, hence we’re using the generic driver, which provisions the container over SSH. Run the following from the host machine console.

    docker-machine create \
      --driver generic \
      --generic-ip-address=<IP Of CNT-1> \
      --generic-ssh-user "docusr" \
      --generic-ssh-key ~/.ssh/id_rsa \
      cnt-1

This will automatically provision the LXD container (CNT-1) with Docker and related tools.
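Since the same call is needed for each container, it can be wrapped in a small function that takes the machine name and IP address. This is a sketch rather than a required step: `provision_machine` and `DRY_RUN` are hypothetical names, and with `DRY_RUN=1` (the default here) the command is only printed.

```shell
# Sketch: build the docker-machine call for one container.
# provision_machine and DRY_RUN are hypothetical names used for illustration.
DRY_RUN="${DRY_RUN:-1}"

provision_machine() {
    machine="$1"
    ip="$2"
    # Assemble the same generic-driver command that is used for cnt-1.
    set -- docker-machine create \
        --driver generic \
        --generic-ip-address="$ip" \
        --generic-ssh-user docusr \
        --generic-ssh-key "$HOME/.ssh/id_rsa" \
        "$machine"
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"      # print only, so the command can be reviewed first
    else
        "$@"
    fi
}
```

Calling `provision_machine cnt-2 <IP Of CNT-2>` prints the command for the second container; with `DRY_RUN=0` it is executed instead.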

4. Docker Client CLI, to manage Docker Containers

All set. Run the following from the host machine console.

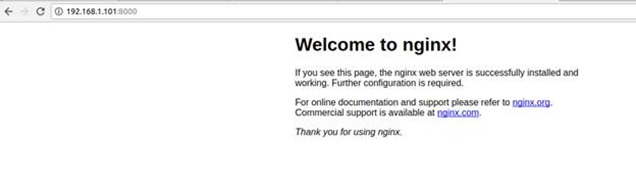

Point the Docker client to the LXD container (CNT-1), run an NGINX web server in a Docker container, and access it from a browser anywhere on your LAN.

    docker-machine env cnt-1
    eval "$(docker-machine env cnt-1)"
    docker run -d -p 8000:80 nginx
    curl $(docker-machine ip cnt-1):8000
    #or take browser and navigate to http://<IP of CNT-1>:8000
    docker-machine env -u
    eval "$(docker-machine env -u)"   #unset the DOCKER_* variables again
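If the curl check is scripted, the web server may not be answering yet at the moment the container has just been started, so a small retry loop can help. The helper below is a sketch; `retry` is a hypothetical name and the attempt count and delay are arbitrary choices.

```shell
# Sketch: retry a command until it succeeds or the attempts run out.
# retry is a hypothetical helper: retry <attempts> <delay-seconds> <command...>
retry() {
    attempts="$1"
    delay="$2"
    shift 2
    n=1
    while ! "$@"; do
        if [ "$n" -ge "$attempts" ]; then
            return 1        # give up after the last allowed attempt
        fi
        n=$((n + 1))
        sleep "$delay"
    done
    return 0
}
```

For example, `retry 10 2 curl -fsS "http://$(docker-machine ip cnt-1):8000"` tries up to ten times, two seconds apart, and returns non-zero if NGINX never responds.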

Download Commands and Reference Links